After 5 days shooting a big banking expo in Boston, I’ve had loads of fun ‘camera spotting’.

Shooting at exhibitions is one of my main activities, and the mixture of run & gun, pack shots, interviews, talking heads and candid videography gives us very strong opinions about what kit works well. Even down to the choice of batteries or lighting stands. And what to wear. But I digress.

I saw big ENG cameras, little GH4s, loads of iPads (!) and even a brave soul with a tricked-out Blackmagic Cinema Camera complete with Beachtek audio interface, follow focus and matte box. It’s not the first time I’ve seen a BMCC used at a trade show, but to my mind it does take a bit of a leap of faith to wrestle one of these specialist cameras into service in such an unforgiving environment.

Expo shooting requires kit that doesn’t wear you down – you’ll be on your feet all day, scooting from booth to booth. You don’t want to be weighed down by too many batteries, and you’ll need plenty of media. Plans change, things happen, and sudden opportunities crop up that might be missed if you need to change lenses or power up a complex camera. Everything has to be quick to deploy yet meet your expectations for picture and sound quality.

In many scenarios, you might not even have a secure base from which to keep bags, chargers and ‘special’ kit (long XLR and power cables for example). Suddenly, you’re in a sort of quasi-military mode, where you’re scooting around with the camera, a tripod, a lamp, some sound kit AND your backpack full of editing machine, hard drives, plus all the other bits and bobs you’ll need throughout the day. 12 kilometres per day with 40lb strapped to your back isn’t quite in Marines territory, but even so…

My go-to camera on these gigs has been the Sony EX1-R, paired with the magic addition of a spider dolly on the tripod – the little shopping trolley wheels. This lets you scoot your camera and tripod around whilst carrying an XLR cable, 416 stick mic and Sanken COS-11 lapel mic, headphones, batteries and water bottle in a ScottEvest ‘wearable office’.

Pretty much every videographer I meet looks at the spider dolly and immediately wants one. It truly adds so much value to your day’s work – and if the floor surface allows it, you can even get a few nice ‘dolly moves’ too – tracking shots, rotating around someone or something – though not all surfaces are up for it.

Due to the cost of carnets, shipping and so on, I rented my main camera from Rule Boston: a Sony PMW300. This is the replacement for the venerable Sony EX3, and bridges the gap between my EX1’s ‘little black sausage of joy’ design and a traditional shoulder-mount ENG camera. I became very enamoured with the PMW300’s shoulder-mounted ergonomics, thanks to its clever viewfinder design. Unlike the EX3’s, the EVF is removable, so the camera can be packed into an airline carry-on bag or Peli case, with room for accessories, batteries, charger, etc.

It seemed to be almost a stop faster than the EX1, though I have not put them side by side. I didn’t find myself using +3dB and +6dB gain as much as I do with the EX1, and shooting time-lapse outdoors with a 16-frame accumulation shutter actually required -3dB, as I ran out of ND and didn’t want to stop down beyond f8 due to diffraction.

There was a little more heft than an EX1, but the pull-out shoulder pad and well-placed EVF provided good balance and took some of the weight and strain off the right hand. All controls fell under my fingertips – it’s ‘just another Sony’ in that respect. Even though it was my first time with the camera, under stressful conditions, I was never hunting for controls or jacks. Switching between one mic at two levels, two mics with independent level control, and mic plus line feed from the mixing desk was simple and quick. I couldn’t say for sure whether the mic preamps were better than the EX1-R’s, but I was never struggling with gain or noise, even though expos are notorious for horrendous ambient noise.

There have been some changes to the menu structure, and flipping between ‘time-lapse’ mode and ‘candid’ mode required more steps than I thought necessary. Choosing the accumulation shutter requires a walk through all the available speeds, rather than the camera remembering your setting and offering a simple on/off toggle. Small point, but it makes a difference for operators in our game.

The PMW300 came with the K1 lens option – like the EX3, you can remove the lens and replace it with a wide-angle zoom or the new K2 option, a 16x Fujinon zoom. The K2 doesn’t go any wider; it just gives you a little extra reach at the telephoto end. In the world of expo videography, though, wide angles are very valuable. Often you’re so close that it’s hard to capture the full ‘scope’ of an expo booth, and as you pull back, your foreground fills with delegates.

This is why I brought my Canon C100 with me, and it got a lot more use than I expected. I brought it primarily for talking heads and interviews – for that S35 ‘blurry background’ look with my 17-55 f2.8 and 50mm f1.4. In fact, most of the time it wore my Tokina 11-16mm wide angle, which did wonderful things for the booth shots. The Fujinon lens on Sony’s PMW cameras has quite a bit of barrel distortion at the wide end, and I remember the ‘shock and awe’ of using the Tokina for the first time on my Canon 550D – very good distortion control and tack sharp.

We also had a few presentations to capture with two cameras – the C100 unmanned on the wide, whilst I operated the PMW300 on the close-up. These were in dark, dingy ‘breakout’ rooms barely lit by overhead fluorescents, with absolutely no chance of adding extra lighting. This could have been a disaster, with ‘panda eyes’ on presenters, but both the C100 and the PMW300 have gamma curves that really help in these circumstances.

This brings us neatly to another ‘trick’ – after all, we have two very different cameras from two separate manufacturers – how on earth are we going to match them?

Whilst I probably wouldn’t want to attach the two to a vision mixer and cut between them live, I could get both cameras surprisingly close in terms of picture ‘look’ by using CineGamma 3 on the PMW300 and Wide Dynamic Range on the Canon. I also dialled in the white balance by Kelvin value rather than doing a proper white set. The Canon’s screen cannot be trusted for judging white balance anyway – you need a Ninja Blade, SmallHD or similarly trustworthy monitor for that. The Sony’s screen is a little better, but has a slight green tint that makes skin tones look a little more yellow than they appear in the recordings. I don’t mess with the colour matrix on either camera, because that needs trustworthy charts and constant access to a grade 1 or 2 monitor – and that is what you’d need to match both cameras for seamless vision mixing.

Suffice it to say that in these circumstances we need to achieve consistency rather than accuracy, so one simple colour correction on each camera brings both to a satisfactory middle ground. That’s how the Kelvin trick with CineGamma 3 and WDR works. Neither is perfect ‘out of the box’, but they are close enough that nudging a few settings gives a great match.

But here’s another important learning point. Because we were creating a combination of Electronic Press Kits, web-ready finished videos and ‘raw rushes’ collections, our shooting and editing schedules were tight. We’d shoot several packages a day, some of them over a number of days. We didn’t have time for ‘grading’ as such, so bringing the Sony and the Canon together so their shots would cut together was very important.

The Canon C100’s highlights were sublime. Everything over 80 IRE just looked great. The Sony’s CineGamma was lovely too, but the Canon looked better – noticeably better when shooting ‘beauty’ shots of a booth built mostly out of white gauze and blue suede. The PMW300 performed well, and you really wouldn’t mind if the whole job were captured on it, but the C100 excelled at high-key scenes. So much so that I’d want to repeat the PMW300/C100 pairing rather than a double act of the PMW300 with a PMW200. If you see what I mean.

There was one accessory we ‘acquired’ on-site that deserves a special mention. It’s something I’ll try to build into similar shoots, and into any other shoots I can get away with. This accessory added a layer of sophistication and provided a kind of shot not often seen in our usual videos. It’s not expensive to buy, but there are costs involved in transport and deployment.

This new accessory – this wonderful addition to any videographer’s arsenal of gizmos – is… a ladder. Take any shot, raise the lens by a metre or so, and witness the awesomeness! Yes, you need (you really must have) a person to stand by the ladder, keep crowds away, stabilise it, and be ready to take and hand over your camera, but… wow. What a view!

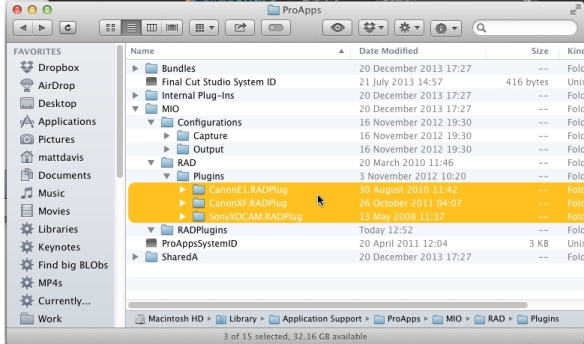

If left for a few minutes (or longer), it would shut down. Powering up again brought everything back. If you held the power button down for >5 secs, it would power down but do the same thing. Hmmm. Clue.